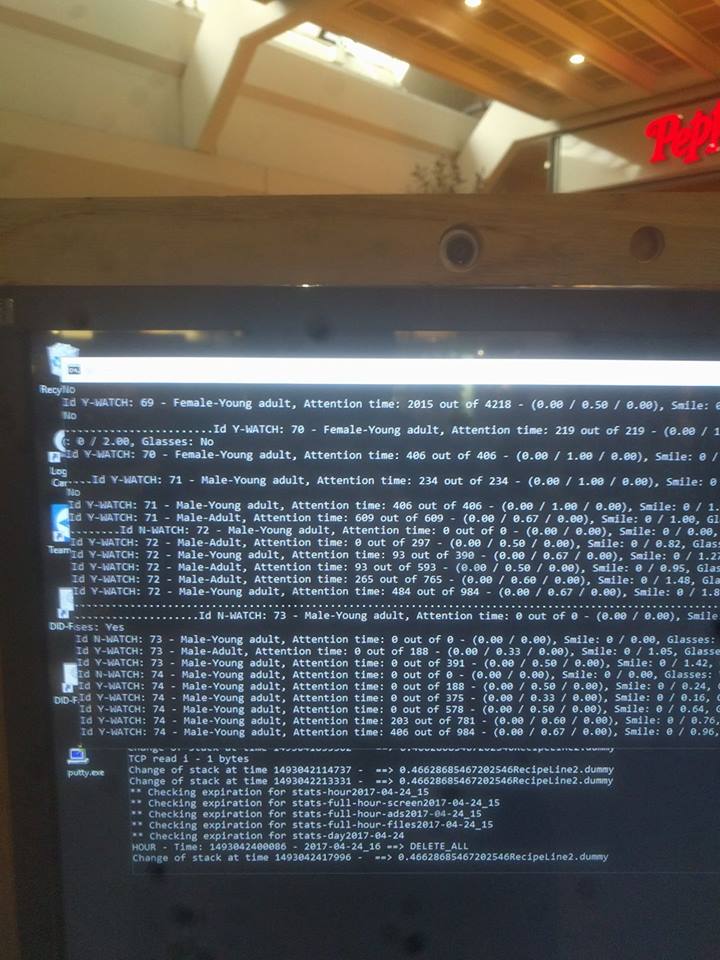

From Twitter: a “crashed” advertisement reveals the kinds of data it records about viewers.

Male-Young adult, Attention time: 484 out of 984, Smile: 0 / 1.8…Glasses: Yes

It’s interesting to note the kind of information being interpreted and recorded: gender, age, “attention”, degree of smiling, and the presence of glasses, all inferred from (presumably) camera input run through pre-trained classification models. How accurate is this data likely to be? And into what other models might it then flow?
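To make the shape of that record concrete, here is a minimal sketch that parses the visible overlay text into a structured record. Everything in it is an assumption for illustration: the `ViewerRecord` class, its field names, and the `parse_overlay` helper are hypothetical, and the meaning and units of the number pairs (“484 out of 984”, “0 / 1.8”) are unknown, so they are stored as-is. The elided portion of the text is skipped, not guessed at.

```python
import re
from dataclasses import dataclass


@dataclass
class ViewerRecord:
    """Hypothetical record for the fields visible in the crashed ad's overlay."""
    gender: str
    age_group: str
    attention: int          # first number in "484 out of 984" (units unknown)
    attention_out_of: int   # second number in "484 out of 984"
    smile_values: tuple     # the two numbers in "0 / 1.8" (meaning unknown)
    glasses: bool


# The overlay text as quoted in the post, including the elided "…" portion.
OVERLAY = "Male-Young adult, Attention time: 484 out of 984, Smile: 0 / 1.8…Glasses: Yes"

# Assumed layout, inferred only from the one visible line; the real format may differ.
PATTERN = re.compile(
    r"(?P<gender>\w+)-(?P<age>[\w ]+), "
    r"Attention time: (?P<att>\d+) out of (?P<total>\d+), "
    r"Smile: (?P<smile_a>[\d.]+) / (?P<smile_b>[\d.]+)"
    r".*?Glasses: (?P<glasses>Yes|No)"  # skip whatever was elided before "Glasses"
)


def parse_overlay(text: str) -> ViewerRecord:
    """Parse the overlay line into a ViewerRecord, or raise ValueError."""
    match = PATTERN.search(text)
    if match is None:
        raise ValueError("overlay text does not match the assumed layout")
    return ViewerRecord(
        gender=match["gender"],
        age_group=match["age"],
        attention=int(match["att"]),
        attention_out_of=int(match["total"]),
        smile_values=(float(match["smile_a"]), float(match["smile_b"])),
        glasses=match["glasses"] == "Yes",
    )


record = parse_overlay(OVERLAY)
print(record)
```

Seen this way, each passer-by becomes a row of categorical and numeric features, which is the form that downstream models would most easily consume.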

https://twitter.com/GambleLee/status/862307447276544000/photo/1