In Algorhythmics: Understanding Micro-Temporality in Computational Cultures, Shintaro Miyazaki discusses the importance of rhythm for understanding how algorithms perform.

“According to Burton [chief engineer of the Manchester Small-Scale Experimental Machine-Resurrection-Project], the position of the so-called “noise probe” was variable, thus different sound sources could be heard and auscultated. These could be individual flip-flops in different registers, different data bus nodes or other streams and passages of data traffic. Not only was passive listening of the computer-operations very common, but was also an active exploration of the machine, listening to its rhythms. Burton continues,

“Very common were contact failures where a plug-in package connector to the ‘backplane’ had a poor connection. These would be detected by running the Test Program set to continue running despite failures (not always possible, of course), and then listening to the rhythmic sound while going round the machine tapping the hardware with the fingers or with a tool, waiting for the vibration to cause the fault and thus change the rhythm of the ‘tune’.”

The routine of algorhythmic listening was widespread, but quickly forgotten. One convincing reason for the lack of technical terms such as algorhythm or algorhythmic listening is the fact that the term algorithm itself was not popular until the 1960s. Additionally, in the early 1960s many chief operators and engineers of mainframe computers were made redundant, since more reliable software-based operators called operating systems were introduced. These software systems were able to listen to their own operations and algorhythms by themselves without any human intervention. Scientific standards and rules of objectivity might have played an important role as well, since listening was more an implicit form of knowledge than any broadly accepted scientific method. Diagrams, written data or photographic evidence were far more convincing in such contexts. Finally, the term rhythm itself is rarely used in the technical jargon – instead the term ‘clock’ or ‘pulse’ is preferred.”

Read the full paper

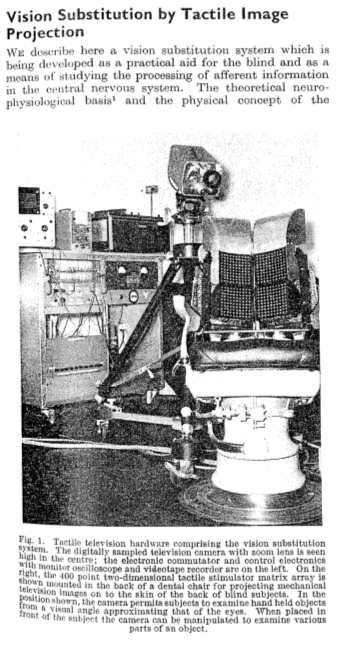

This practice of close listening has been documented at the Natuurkundig Laboratorium in Eindhoven. As explained on Ip’s Ancient Wonderworld:

“The head of the NatLab at the time, a certain W. Nijenhuis, had the idea of installing a small amplifier and loudspeaker on the PASCAL, which would pick up radio frequency interference generated by the machine. Unsurprisingly, the usual course of events unfolds: While actually intended for diagnostic purposes, people quickly discovered that they could abuse the speaker to make simple music. But Mr Nijenhuis, rather than scolding his staff for the waste of precious calculation time, actually decides to record those rekengeluiden (computing noises) on a 45 rpm vinyl.”

Read more

Listen to the recordings